Azure AI Search - Retriever Strategies - Series

When there are different ways of solving a problem, search is one space that can be solved by different combinations of strategies: <Query, Index, Top-K>.

Searching for matching “verbiage” is a dominant problem, not just for the results themselves but also for its cascading effect on content generation.

Good search increases productivity, streamlines exploration, and improves the quality of everything built on top of it further down the road.

I am about to implement search over a forms library where people can be ambiguous, search, and explore, while still getting good results.

Stack to experiment: LLMs, embeddings, vector DBs, full-text search, metadata-based search, cross-encoding models, rule-based heuristics, evaluation

Search involves a query plus metadata filters. Another aspect of search is using reference documents: uploading the document to be searched and decomposing it into sections, titles, content, and descriptions.

Reducing the space we need to search is the most direct way to simplify the problem and present users with results on what they want to explore. In Azure, this is done through the schema definition, which declares which fields are Searchable, Filterable, Sortable, and Facetable. Filters can either be set by the user, or filter values can be extracted from the query. Irrelevant data doesn’t even need to be searched.
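To make the filtering idea concrete, here is a minimal sketch of turning user-selected (or query-extracted) metadata values into an OData filter expression, which is the filter syntax Azure AI Search accepts. The helper name and the field names (category, state) are hypothetical, and the fields are assumed to be marked filterable in the index.

```python
from __future__ import annotations


def build_filter(filters: dict[str, str | list[str]]) -> str:
    """Build an OData filter string from field -> value(s) pairs.

    Field names are illustrative; they must exist in your index and be
    marked filterable for the expression to be accepted.
    """
    clauses = []
    for field, value in filters.items():
        values = value if isinstance(value, list) else [value]
        # OData string literals escape a single quote by doubling it.
        quoted = ["'" + v.replace("'", "''") + "'" for v in values]
        if len(quoted) == 1:
            clauses.append(f"{field} eq {quoted[0]}")
        else:
            # Several allowed values for one field are OR-ed together.
            ors = " or ".join(f"{field} eq {q}" for q in quoted)
            clauses.append(f"({ors})")
    # Different fields are AND-ed, which narrows the search space.
    return " and ".join(clauses)


print(build_filter({"category": ["policy", "endorsement"], "state": "NY"}))
```

The resulting string would be passed as the filter expression of the search request, so documents outside those categories are never scored.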

Role of metadata to increase the relevance of document, and query pairs:

The retriever trained by Azure AI is optimized for different kinds of searches. From the incoming query standpoint - we can make the retriever more context-aware and improve the precision.

Let’s explore what kinds of synthetic data could potentially add value:

Document metadata (Additional information on top-level - document basis)

Query Augmentation (Topics, keywords)

Content metadata (Additional information about a specific chunk of content) - keywords, summary, category, references

Aspects involved - How we talk to vector databases + knowledge base (Retrieval), How we talk to LLM (Generation)

Expectation: Search results with matching verbiage ranked by close similarity. It’s a retrieval problem - we will explore the aspects to consider for a retrieval problem since the question from the user is pretty open-ended.

User aspects: The user can be coming from one of these worlds:

A user who doesn’t know the verbiage in the search index and is looking to explore and find what they need.

A user who knows which words or vocabulary to search for, so they get exactly the forms they wanted.

A user who is ready to put in conversational questions, describing the intent of the search in a long free-form question.

Search also opens the way to content manipulation: prompting on top of search results brings a whole new purview of content.

Aravind Srinivas from Perplexity spoke about “Search” in many interviews - having a good knowledge-grounded content curation engine is key in the space to extend the horizon of information aggregation.

Aspects to consider about typos: The user query can contain typos. In that case the ranked list may contain zero hits from keyword search while vector search still returns ranked results, and the relevance of those results may not make sense. That’s the drawback of vector search: there is always something nearest in the search space, so you always get some relevance.

Corresponding user experience: If the user searches for out-of-domain words, the vector search ranking takes over and always returns some results. There is a balance to strike between when not to show results and when content simply doesn’t match.

Technical constraints come with a trade-off:

1) No calls to GPT to analyze the search query, since adding a generation call increases the response time.

2) No prompt-based approach to query understanding before experimenting with how the retriever we use reacts to the search text.

Impact of content chunking in the vector database: The way the content gets chunked, and file diversity, matter much more in search results exploration than in search + response or answer generation on top of it. We need good file diversity in Azure search results for the exploration aspect. Consider the insurance domain: for searches we need good diversity across policy vs endorsement forms, and there could be more categories on top of that.

For retrieval to perform efficiently, we need to consider the data indexing part: how documents are chunked before being fed into Azure AI Search. For some aspects, content markdown (to keep the policy structure intact) needs to be an added field apart from just the content that gets fetched.

There are cases where certain page numbers that are expected in the answer are not added to the context because of low recall from the vector solution. Hence optimizing for good recall and precision is crucial for a well-grounded answer covering all the concepts around a question.

Graphs to plot - Token Count vs Document index, Token Count Distribution (with and without overlap flag of the content chunk), Content-Length vs Document index (this could help on how much content we need to display in UI if the pipeline stops with search aspect)

Semantic chunking might be applicable depending on the use case. Consider a case where we have endorsement forms for different categories in insurance: the same verbiage runs through various forms and policies with minor changes in additional metadata. Hence, before applying a more elaborate chunking strategy, a good baseline is to start with sequential chunks with overlap.
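As a reference point for that baseline, here is a minimal sketch of sequential chunking with overlap. It splits on whitespace tokens as a stand-in for the embedding model's real tokenizer, which is an assumption; the function name and the size/overlap values are illustrative only.

```python
from __future__ import annotations


def chunk_with_overlap(tokens: list[str], size: int, overlap: int) -> list[list[str]]:
    """Split tokens into windows of `size` that share `overlap` tokens."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    step = size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + size])
        if start + size >= len(tokens):
            break  # the last window already reached the end of the text
    return chunks


text = "this endorsement modifies insurance provided under the following policy"
for chunk in chunk_with_overlap(text.split(), size=4, overlap=2):
    print(" ".join(chunk))
```

Logging the token count of each chunk produced this way, with and without overlap, gives the token count distribution plots mentioned above.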

In some aspects, the contents in Azure AI Search (Passages, Sentences, Propositions) could be decomposed by some LLMs to have better retrieval.

📝 In Azure AI Search, adding a semantic ranker on top of hybrid search results narrowed the result set, so not enough context could be curated for answers. Hence benchmarking the result set with different strategies is crucial to see the consistency in the answers generated.

Azure AI Search strategies vary for context curation for generation vs exploration of just the search results. These two goals call for quite different strategies.

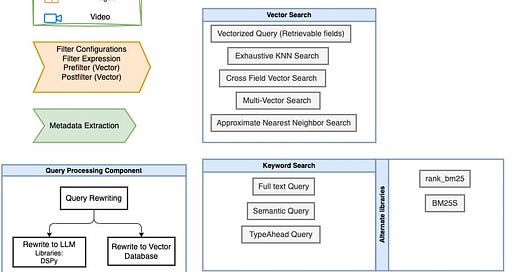

Below are the search strategies in Azure AI Search:

Keyword Search

Vector Search

Semantic Search

Hybrid Search

Each of the above techniques has its advantages and drawbacks. Keyword search, for example, has recall challenges: there is a vocabulary gap, and it struggles to understand natural language questions.

Before experimenting with the results of Azure AI Search - We will add a Search Context with the fields we need to explore.

Search context:

Query

Fields

Fields Content Vector

Semantic Configuration

Filter expression

Additional search text if needed (Eg: Topics etc)

Before proceeding with the kind of search, we have to specify the schema definition of search.

Vector Search:

This helps with getting conceptual similarity for the query. It maps text, such as the sentences in a query, into a high-dimensional vector space. Vectors are placed based on conceptual similarity and don’t take keywords or exact matches into account. Vector queries are ideal for content generation on top of the search results. Distance metrics like cosine similarity are used to gauge how alike two vectors are. Vector search is versatile and robust in that it always gives some results, since there is always a sentence that is nearest in the latent space of embeddings. Setting a cosine similarity threshold is therefore a balance between precision and recall.

Choice of embeddings + quantization efforts (indexing and querying): There are different models we can use for embedding; consider Cohere Embed V3, which is specifically tuned for RAG applications. We have to benchmark the retrieval results by comparing the models side by side.

In Vector search,

Encoder - that represents the text in the latent space - vector embedding

Similar items get mapped closer in the space

Search exhaustively (exact K-nearest neighbors) or approximately (approximate nearest neighbors)

Vector Search Strategies:

ANN Search (faster): uses HNSW, a graph-based method with good recall. You are trading off perfect recall for performance.

Exhaustive KNN Search: computes the distance between the query and every vector in the index

K-Nearest Neighbors (KNN):

Exhaustive Search: Compares the query point to every single point in the dataset to find the exact nearest neighbors.

Precision: Finds the true nearest neighbors.

Speed: Slower for large datasets because it checks all points.

Use Case: Best for small to medium-sized datasets where precision is crucial.
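To illustrate what exhaustive search does, here is a minimal brute-force sketch in plain Python using cosine similarity. The toy 2-dimensional vectors and document ids are made up for illustration; a real index stores embeddings with hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def exhaustive_knn(query, vectors, k):
    """Score the query against every stored vector and keep the top k."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in vectors.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy index: document id -> embedding (illustrative values only).
index = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
for doc_id, score in exhaustive_knn([1.0, 0.1], index, k=2):
    print(doc_id, round(score, 3))
```

Note that the function returns k results for any query, however unrelated, which is the "always some relevance" behavior of vector search; a similarity threshold on the returned scores is one way to cut that off.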

Impact of K in Vector Search:

I tried to log the results for a search query on K = 50, 100, 150 to see how results are positioned and ranked.

Pay special attention to very high and very low values of K. A very small K can drop relevant documents before they ever reach the ranking stage, while a very large K pulls in weakly related documents and adds latency.

Approximate Nearest Neighbors (ANN):

Approximate Search: Uses algorithms to find neighbors that are close enough, not necessarily the exact nearest.

Precision: Sacrifices a bit of accuracy for speed.

Speed: Faster and more efficient, especially for large, high-dimensional datasets.

Use Case: Ideal for very large datasets where speed is a priority over exact precision.

Adding Filters in vector search:

Apart from the filters we mention in the filter expression, we have pre- and post-filtering modes. Prefiltering is best for selective filters, when there are well-defined parts of the search space we need to look in. We can also provide multiple vector fields in the vector search query, both in the documents and in the queries. Consider a content chunk and a source name: in this case, a search result can be based on both vectors (title and body, title and image).

Prefilter Mode:

The filter mode determines the search space of a vector query: whether the filter applies to the entire searchable space or only to the search results. Prefilter applies filters before query execution, so the search surface over which the vector search algorithm looks for similar content is greatly reduced. Prefilter is the default mode.

PostFilter Mode:

In postfilter mode, we apply the filter after the query execution is done on the search space.
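The difference between the two modes can be sketched with a toy example. This is not how Azure AI Search implements filtering internally; it only assumes precomputed similarity scores and a hypothetical category field to show why the two modes can return different result sets.

```python
from __future__ import annotations


def top_k(scores: dict[str, float], k: int) -> list[str]:
    """Return the ids of the k highest-scoring documents."""
    return sorted(scores, key=scores.get, reverse=True)[:k]


def prefilter_search(scores, categories, wanted, k):
    # Filter first, then take the top k from what is left.
    candidates = {d: s for d, s in scores.items() if categories[d] == wanted}
    return top_k(candidates, k)


def postfilter_search(scores, categories, wanted, k):
    # Take the top k over everything, then drop non-matching documents.
    return [d for d in top_k(scores, k) if categories[d] == wanted]


# Illustrative similarity scores and metadata.
scores = {"p1": 0.9, "p2": 0.8, "e1": 0.7, "p3": 0.6, "e2": 0.5}
categories = {"p1": "policy", "p2": "policy", "e1": "endorsement",
              "p3": "policy", "e2": "endorsement"}

print(prefilter_search(scores, categories, "endorsement", k=2))
print(postfilter_search(scores, categories, "endorsement", k=2))
```

In this toy data the two best matches overall are policy forms, so post-filtering for endorsements leaves nothing, while pre-filtering still returns two endorsement forms. That is the reason postfilter mode can return fewer than K results.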

Context of Vector search:

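    # Excerpt from a method of the search client class; self.generate_embeddings
    # and search_context are defined elsewhere in that class.
    from azure.search.documents.models import VectorizedQuery

    # One vector query per vector field in the search context.
    vector_queries = [
        VectorizedQuery(
            vector=self.generate_embeddings(search_context.query),
            k_nearest_neighbors=50,
            fields=vector_field,
            exhaustive=True,
        )
        for vector_field in search_context.fields_content_vector
    ]

fields_content_vector contains multiple vector search fields like location, content, etc. Above is an example of a multi-vector search, where we query multiple vector fields at the same time by passing several vector queries. Since each field gets its own vector query, a different embedding model can be configured per field.

Cross-field Vector Search: In this scheme, we query multiple vector fields at the same time with a single query vector, so the same embedding model must be used for all of them.

    # A single query vector compared against two vector fields.
    # Wrapped in a list because the search call expects a list of vector queries.
    vector_queries = [
        VectorizedQuery(
            vector=self.generate_embeddings(search_context.query),
            k_nearest_neighbors=50,
            fields="contentVector, sourceVector",
            exhaustive=True,
        )
    ]

Keyword Search: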

While vector search is good at extending into conceptual relationships and semantic closeness, keyword-based search is ideal when we need to explore a forms library. When you need to find an exact term or phrase and you know what you are looking for, keyword search is the right fit, because the result relies on exact matches. People care about this a lot. Consider the insurance domain: they need to find the document for a specific policy number, and vector search is a bad idea for that.

Under keyword search, we have the following concepts:

Full-text query

Semantic Query

Typeahead query

Full-text query search: In this scheme, we provide the full-text query that needs to be searched across the documents - It has little room for ambiguity in the search text since we are looking for the exact search text the user gives. Any field that’s given as searchable is used in the search process.

Query type: Simple, Full

The simple query type is the default parser and is mostly used for full-text search: a keyword search made up of important terms and phrases that must match exactly. The full query type is used for advanced query constructs like fuzzy and wildcard search. During full-text search, non-essential words like “the”, “and”, “it” may be dropped, depending on the analyzer configured on the field. The simple syntax also supports search operators, including prefix search with *, so a term like “pollution” followed by * fetches pollution-related forms.

Extending the query type - We can use the query type as “semantic” to perform semantic ranking or perform semantic modeling of the query.

The Analyze Text call on an index shows how a query or phrase is broken into tokens. It’s used for testing how a text analyzer breaks up the text. Depending on the analyzer, strings are lowercased and may also go through stemming, lemmatization, or stop word removal. Larger strings are broken on spaces and dashes and then indexed as separate tokens. It is these tokens that the search query is matched against in the index. If the search results are not good, we need to see how the query is getting tokenized and how the content was indexed.

When given query terms or expressions - we use the below scoring algorithm to rank based on the relevance score. Okapi BM25 scoring algorithm is used to produce rankings for the full-text search.

BM25 Scoring Algorithm:

BM25, short for Best Matching 25, is a ranking function used by search engines to determine the relevance of documents to a search query. It builds on the TF-IDF concept and is part of the probabilistic information retrieval framework.

Key Points:

Term Frequency (TF): Measures how frequently a term appears in a document—higher frequency usually means higher relevance.

Inverse Document Frequency (IDF): Gives more weight to rarer terms across the document set, reducing the influence of common terms that aren't particularly useful for relevance.

Document Length Normalization: Adjusts the ranking score to avoid favoring longer documents disproportionately, balancing out their length's effect.

Scoring Formula: BM25 calculates a score for each document based on its term frequency and document length, among other parameters. The parameters k1 (term frequency saturation) and b (length normalization) can be tuned to improve performance.

Formula (Simplified):

$$\mathrm{Score}(D, Q) = \sum_{t \in Q} \mathrm{IDF}(t) \cdot \frac{\mathrm{TF}(t, D) \cdot (k_1 + 1)}{\mathrm{TF}(t, D) + k_1 \cdot \left(1 - b + b \cdot \frac{\mathrm{len}(D)}{\mathrm{avglen}}\right)}$$

D: Document

Q: Query

TF(t, D): Term frequency of term t in document D

IDF(t): Inverse document frequency of term t

len(D): Document length

avglen: Average document length

k1, b: Tunable parameters
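The simplified BM25 formula can be sketched in plain Python as follows. The simplified formula leaves the IDF definition open, so this sketch assumes the smoothed variant log(1 + (N - n + 0.5) / (n + 0.5)); the defaults k1 = 1.2 and b = 0.75 are commonly used starting values, and the tokenized documents are made up for illustration.

```python
import math
from collections import Counter


def bm25_scores(query_terms, docs, k1=1.2, b=0.75):
    """Score each tokenized document in `docs` against the query terms."""
    if not docs:
        return []
    n_docs = len(docs)
    avg_len = sum(len(d) for d in docs) / n_docs
    # Number of documents that contain each term at least once.
    doc_freq = Counter(term for d in docs for term in set(d))
    scores = []
    for doc in docs:
        tf = Counter(doc)
        score = 0.0
        for term in query_terms:
            if tf[term] == 0:
                continue
            # Assumed IDF variant; rarer terms get a larger weight.
            idf = math.log(1 + (n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5))
            # Length normalization: long documents need more hits to score high.
            denom = tf[term] + k1 * (1 - b + b * len(doc) / avg_len)
            score += idf * tf[term] * (k1 + 1) / denom
        scores.append(score)
    return scores


docs = [
    ["flood", "policy", "form"],
    ["auto", "policy"],
    ["flood", "flood", "endorsement", "form", "extra", "words"],
]
print(bm25_scores(["flood"], docs))
```

Changing k1 and b in this sketch is a cheap way to build intuition for how term frequency saturation and length normalization shift the ranking before tuning them on a real index.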

Why Use BM25?

Effective: Offers a balanced and effective retrieval performance, making it popular in search engines like Elasticsearch.

Flexible: Can be adjusted with parameters to suit different datasets and requirements.

The simple query type uses the simple query parser, which looks for the search phrase in the document strings. For the full query type, here is how the Lucene query parser works.

Parsing text in Lucene's Query Parser follows a structured approach designed to interpret and translate user input into search queries that can be executed against a Lucene index.

Steps in Lucene Query Parser:

Tokenization

The first step involves breaking down the input text into tokens. These are typically words or terms that the parser will use to construct the query. Tokenization respects whitespace, punctuation, and other delimiters depending on the configured analyzer.

Syntax Analysis

Lucene's Query Parser has a well-defined syntax, allowing it to interpret various query constructs such as:

Term Queries: Basic single-term searches.

Phrase Queries: Sequences of terms enclosed in double quotes, searching for exact phrases.

Boolean Operators: AND, OR, NOT for combining multiple queries.

Wildcard Queries: Using * and ? for partial matches.

Range Queries: Searching within a specific range of values, e.g., [20200101 TO 20201231].

Fuzzy Queries: Searching for terms similar to the given term using ~.

Field-Specific Queries: Specifying the field to search in, e.g., title:"Lucene Query".

Normalizing Input

Lucene normalizes and processes tokens for consistent querying. This includes converting terms to lowercase, removing stop words, and applying stemming or lemmatization based on the configured analyzer.

Parsing the Query Structure

The parsed terms and operators are combined to form a query object. Lucene's Query Parser translates the textual query into a cohesive query structure that represents the logical makeup of the search.

Generating the Query Object

The final step is constructing the query object, which represents the parsed query and can be executed against a Lucene index. This object takes into account all the parsed tokens, operators, and structures to deliver results accurately.

In essence, the full Lucene Query Parser is like a strict schoolteacher making sure every part of a query is on its best behavior before letting it loose to find matches in your indexed documents.

Search Mode: This controls precision and recall. If you want more recall, where matching any part of the query is enough, go with “any”. For more precision, where all parts of the query must match, go with “all”. For queries against large text blocks (fields holding content or long descriptions), the choice of any/all can play a key role.
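The any/all trade-off can be illustrated with a toy matcher. This is only a sketch of the idea on whitespace-split, lowercased terms; the real service matches against analyzer tokens and query operators, which this does not model.

```python
def matches(query: str, document: str, search_mode: str = "any") -> bool:
    """Toy term matcher: 'any' needs one shared term, 'all' needs every term."""
    query_terms = set(query.lower().split())
    doc_terms = set(document.lower().split())
    if search_mode == "all":
        return query_terms <= doc_terms       # every query term is present
    if search_mode == "any":
        return bool(query_terms & doc_terms)  # at least one term is present
    raise ValueError("search_mode must be 'any' or 'all'")


doc = "commercial flood endorsement"
print(matches("flood policy", doc, "any"))
print(matches("flood policy", doc, "all"))
```

With "any" the document matches on "flood" alone (more recall); with "all" it is rejected because "policy" is missing (more precision).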

Search fields: only fields marked as “searchable” during index creation can be searched. It’s ideal to have the same fields for search and selection.

Ordering the results: this can be influenced by adding fields that are marked “sortable”.

Fields that are marked as retrievable will be returned in the search result.

The way queries are tokenized plays a huge role, since we are considering whether topics are necessary add-ons to narrow the context of user queries.

My thoughts:

Having a delegator component that routes short-form queries to keyword search and long-form queries to semantic search misses many nuances. There is a need for hybrid search, which improves results by giving a diversity of documents. We also have to see how negation cases (like “not storm” policy forms) need to be handled in hybrid search.

Keyword search might return 0 results, limiting the search exploration space. With vector search there is always something similar in the vector space, so there are always search results. This is indeed a boon and a bane! We don’t want to show documents just for the sake of some proximity. Hence scoring profiles are a factor in boosting the relevance.

Hybrid Search:

When we want to utilize the power of both searches, we tend to go towards hybrid, where the query is transformed into a text-based query and vector-based query and results are combined into a set of results. Both the queries run in parallel - the results are then merged, ranked, and re-ordered by the new search scores - we use Reciprocal Rank Fusion to get a single ranked result set.

Search is executed based on text + vector in parallel and the results are then provided to the RRF algorithm. Multiple search result sets are created and then fused based on the ranking algorithm.

The scores from vector search (the HNSW similarity metric) and from a hybrid query with RRF have different magnitudes. Hence we have to be careful when calculating a relevance percentage. RRF scores fall in a narrow range even when the underlying similarity score is high. This is by design: RRF works on rank positions, not on the raw scores, so search scores ranked using RRF will not have a wide distribution.

Reciprocal Rank Fusion (RRF):

Purpose: Combines search results from multiple search methods (algorithms) to create a single, unified ranked list.

How It Works:

Rank Assignment: Each result from different methods is assigned a reciprocal rank score—1 divided by (rank + a constant).

Fusion: These scores are combined to produce a final ranking. A higher score means the document is considered more relevant.

Key Points:

Balance: Considers rankings from various methods, giving higher relevance to results appearing on top in multiple lists.

Improves Accuracy: Often results in better accuracy compared to using a single ranking method.
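The fusion step described above can be sketched in a few lines of Python. The constant k = 60 is a commonly used value and is treated here as an assumption; the document ids and the two ranked lists are made up for illustration.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document ids into one ranked list.

    Each document receives 1 / (k + rank) from every list it appears in,
    with ranks starting at 1, and the contributions are summed.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


keyword_ranking = ["a", "b", "c"]  # e.g. BM25 order
vector_ranking = ["c", "a", "d"]   # e.g. vector similarity order

for doc_id, score in reciprocal_rank_fusion([keyword_ranking, vector_ranking]):
    print(doc_id, round(score, 5))
```

With two lists and k = 60, no document can score above 2/61 (about 0.033), which is why RRF scores sit in such a narrow band and are awkward to present as a relevance percentage.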

It comes down to two styles of re-ranking: listwise and pairwise. Listwise re-ranking works with the entire ranked lists from multiple sources and aggregates them into a unified ranking list. In pairwise re-ranking, documents are compared in pairs to determine the final position of each document.

At the time of writing, Azure AI Search doesn’t allow the weighting of keyword and vector search to be configured. There are vector databases like Weaviate that let you specify the split between the two in the final ranking (80/20, 70/30, etc.).

RRF is inherently a list-wise re-ranking method, as it aggregates and processes full-ranked lists to determine the final rankings. Below are the strategies vs benchmarks from Azure.

Removing irrelevant results:

We have a similarity score between the query and the matched documents, but the RRF score can’t reveal much about which results form a closer group and which a farther one. We can use cosine similarity to see the groups of results, and then eliminate from the Azure AI Search results the groups that sit farther from the query. Weaviate offers this feature as “Autocut”.
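One simple way to approximate this idea is to cut the result list at the first large drop in similarity. The sketch below is not Weaviate's Autocut algorithm; it is a hypothetical gap-based heuristic, and the min_gap value and scores are illustrative and would need tuning on real data.

```python
def cut_at_first_gap(scores, min_gap=0.1):
    """Keep results up to the first drop of at least `min_gap`.

    `scores` must be cosine similarities sorted in descending order.
    """
    for i in range(1, len(scores)):
        if scores[i - 1] - scores[i] >= min_gap:
            return list(scores[:i])  # everything before the jump is the close group
    return list(scores)              # no clear jump: keep all results


similarities = [0.91, 0.89, 0.88, 0.62, 0.60]
print(cut_at_first_gap(similarities))
```

Here the first three results form the closer group and the last two are dropped because of the jump from 0.88 to 0.62.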

Semantic Re-ranking:

It adds a re-ranker layer, and it has multilingual capabilities. It helps find extractive answers and captions, which might be useful when adding a document for reference and searching it. This combines vector search and keyword search, with the semantic re-ranker on top. The results are first passed to RRF and then to the semantic ranker, hence retrieving the top 50 documents is essential to broaden the space the semantic ranker works on.

The semantic ranker doesn’t use vectors. It adds a secondary ranking over the initial search results, which are BM25-ranked for text queries or RRF-ranked for hybrid queries, and uses pre-trained language models from Bing to get semantically relevant results.

Processing Query Component:

Letting the raw user query directly hit the Azure AI Search index could result in a lot of irrelevant results.

Consider a query: “Discuss with me about communication exclusion”. Sending the query directly through hybrid search ranked content starting with “About” first, while decomposing the query into its important tokens moved the ranking closer to the intent of the query. The ordering of words in the query also affects the ranking in retrieval. Hence, to optimize retrieval, the query itself needs to be optimized.

Query Rewriting: The idea is to use an LLM to rewrite queries for both the LLM and the vector database. For humans, a query carries more meaning when it is comprehensive, which brings filler words and exhaustive phrases. For a vector database, what matters is which words land the query in the right region of the latent space, so the rewrite should be tuned to the embedding model in use.

The intuition behind query rewriting is adding different perspectives to questions - a rephrased question might get itself matched in layers of AI search results.

Adding a prompt layer approach to query rewriting should be considered in terms of cost + latency - since Search is the starting point of the application, we can expect more calls for search.

Performing a union across the different queries vs retriever results is the experiment I will attach in the next blog.

I read a blog from Shortwave where they provided a detailed process of developing a knowledge assistant through abstraction and thorough definition of tools.

AI Search was one such tool.

Design Aspects:

Highlights from the search query: captions and highlights are provided by Azure AI Search, but only for exact search word matches. Semantic phrases can be highlighted using a prompt-based approach or using libraries like pytextrank or spaCy.

Not everything needs to go through a prompt layer. I experimented with the prompt below and the semantic span of the highlights was good, but to scale it to a larger user base with higher frequency, we need to think of statistical + vectorized approaches.

    class SemanticHighlights:
        def get_highlight_content_prompt(self):
            # {query} and {content} are placeholders filled in before the LLM call.
            highlight_content_prompt = """
            Here is the search query: {query}
            And here is the content to search through: {content}

            Please follow these steps to highlight exact phrase matches and semantically close matches of the query within the content:
            Do not say any other text or comment except the content highlights with <mark> tags.
            Refrain mentioning about Here is the highlighted content, just give the highlighted content alone in <mark> tags.

            1. Search through the content to find both exact phrase matches and semantically close matches to the full query. The matches should be case-insensitive, but do not convert the query or content to lowercase.
            2. For exact phrase matches, add <mark> tags around the corresponding text in the original content. Additionally, highlight semantically close matches by adding <mark> tags around them as well. Do not alter the case or wording of the original content, just add <mark> and </mark> tags around the matched phrases.
            3. If the content with highlighting added is longer than about 4 sentences, truncate it to the 4 most relevant highlighted sentences. After the truncated part, add a plain text summary of the remaining content, followed by an ellipsis (...).
            4. The final highlighted content snippet should retain the original casing and wording of the content everywhere except the <mark> tagged portions.

            Return the final highlighted content snippet in markdown format. If there are no possible exact phrase matches or semantically close matches between the query and the content, return an empty result using markdown format, like this: `No matches found`.

            Focus on both exact phrase matches and semantically similar phrases. Highlight anything that closely resembles the meaning of the query phrase to provide the most relevant results to the user.
            Output your response in the specified format with highlights using <mark> tags.
            """
            return highlight_content_prompt

A content highlight prompt can be written so that the highlights also span semantically close matches. One consideration: adding two GPT calls to the search pipeline adds an average response time of 40 seconds, which is too much for search exploration. Hence adding many prompt layers is a constraint in terms of cost + latency.

The average response time for querying the index is approximately 1.5 to 2 seconds. Hence experimenting with different querying strategies, as opposed to query rewriting, would not slow down the search pipeline.

Semantic Ranking consists of Semantic Captions with highlights on the most relevant terms and phrases and also semantic answers that are extractive.

Because we chunk the documents at indexing time, there is a high chance of getting multiple search results from the same document, which defeats the purpose of exploring a forms library. Hence grouping results by unique file is mandatory for this use case.
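A minimal sketch of that grouping step, applied to results after they come back from the index, could look like this. The result shape and the field names (file_name, chunk_id, score) are hypothetical and depend on the index schema.

```python
def best_chunk_per_file(results):
    """Keep only the highest-scoring chunk for each source file."""
    best = {}
    for hit in results:
        current = best.get(hit["file_name"])
        if current is None or hit["score"] > current["score"]:
            best[hit["file_name"]] = hit
    # Return one hit per file, best score first.
    return sorted(best.values(), key=lambda h: h["score"], reverse=True)


# Illustrative search hits: two chunks come from the same file.
hits = [
    {"file_name": "A.pdf", "chunk_id": 1, "score": 0.8},
    {"file_name": "B.pdf", "chunk_id": 1, "score": 0.7},
    {"file_name": "A.pdf", "chunk_id": 2, "score": 0.9},
]
for hit in best_chunk_per_file(hits):
    print(hit["file_name"], hit["chunk_id"], hit["score"])
```

Because deduplication shrinks the list, it helps to request more results than will be displayed so the final page still shows a diverse set of files.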

Raw content may not be suitable to show and consume as a search result, for example a chunk whose extracted text is mostly a page number. Hence having content markdown as a separate attribute in the search index, alongside the content extracted by the parsing library, is paramount.

One option is a decider prompt on top of the search question: a kind of query component that delegates the query to different searches based on the nature of the question.

The other is to run through hybrid search directly, since the search itself decomposes the question into tokens and applies its algorithms on top of them.

Results Exploration:

Keep a test dataset in this format: endpoints_config.json, which describes how to run in different environments (local, dev, prod). That way we can have separate eval scripts for response time, score analysis, retriever results analysis, etc., and the changes we make are well versioned.

    {
      "dev": {
        "status": {
          "url": "/status",
          "method": "GET"
        },
        "keyword_searches": {
          "without_filters": [
            {
              "url": "/search",
              "method": "POST",
              "payload": {
                "question": "policy 1389"
              }
            }
          ],
          "with_filters": [
            {
              "name": "Policy Updates",
              "url": "/search?category=policyupdates",
              "method": "POST",
              "payload": {
                "question": "Fruits policy 1239"
              }
            }
          ]
        },
        "semantic_searches": {
          "without_filters": [
            {
              "url": "/search",
              "method": "POST",
              "payload": {
                "question": "Give top policy forms related to the insurance of cars"
              }
            }
          ],
          "with_filters": []
        },
        "document_searches": {}
      },
      "local": {},
      "prod": {}
    }

Leverage Azure Application Insights: log the query, endpoint, response time, and retriever results, and use Kusto Query Language to monitor what queries users ask the system.

Plot the relevance score distribution:

Hybrid Search: In the case of hybrid search (keyword + vector search) sent to the RRF ranker, scores come with different magnitudes. When we want to display a relevance percentage, we need to account for the magnitude of each method. For example, a hybrid search run across a set of search queries resulted in the below distribution. Applying different normalizations on top of it produces the same shape of distribution, so it’s hard to quantify why one result is ranked higher than another, since the difference in magnitude between results is very small.

To evaluate the ranking of search results with a query, we can use metrics like MRR (Mean Reciprocal Rank), and NDCG (Normalized Discounted Cumulative Gain). I tried to use a library like ranx to plot the evaluation metrics.
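For intuition about what these metrics measure, here is a plain-Python sketch of MRR and NDCG@k. It does not use the ranx API; the function names are made up, the relevance labels are illustrative, and NDCG uses the linear-gain form (gain / log2(rank + 1)), which is one of several common variants.

```python
import math


def reciprocal_rank(ranked_ids, relevant_ids):
    """1 / rank of the first relevant document, or 0 if none is retrieved."""
    for rank, doc_id in enumerate(ranked_ids, start=1):
        if doc_id in relevant_ids:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(runs):
    """`runs` is a list of (ranked_ids, relevant_ids) pairs, one per query."""
    return sum(reciprocal_rank(r, rel) for r, rel in runs) / len(runs)


def ndcg_at_k(ranked_ids, gains, k):
    """`gains` maps document id -> graded relevance (missing ids count as 0)."""
    dcg = sum(gains.get(d, 0) / math.log2(i + 1)
              for i, d in enumerate(ranked_ids[:k], start=1))
    ideal = sorted(gains.values(), reverse=True)[:k]
    idcg = sum(g / math.log2(i + 1) for i, g in enumerate(ideal, start=1))
    return dcg / idcg if idcg > 0 else 0.0


runs = [(["a", "b", "c"], {"b"}), (["x", "y"], {"x"})]
print(mean_reciprocal_rank(runs))
print(ndcg_at_k(["c", "b", "a"], {"a": 3, "b": 2, "c": 1}, k=3))
```

Both metrics depend only on rank positions and relevance labels, not on the raw search scores, which makes them usable even when RRF scores are tightly clustered.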

Consideration needs to be given to the magnitude of the relevance values: since the distribution is narrow, the scores need to be scaled to calculate the evaluation metrics. We can plot overall evaluation metrics vs query score distribution statistics, but since the relevance values affect the ranking statistics, below are the results. The scores also fall into 3 buckets as above, so the true importance of a document’s position or ranking gets missed. Hence, the choice of relevance scores, or having a distribution to work with, is very important.

Experiments to perform:

Use Multi-Query Retriever to perform retrieval across 5 different perspectives of query and find the Union of documents retrieved.

Currently, chunks are split by content size to embed in Azure AI Search, depending on the embedding model. That is not very context-aware and lacks a higher-level picture of the document. Context augmentation strategies include injecting metadata along with the content vector, defining node relationships such as chunk with (previous chunk, next chunk, parent summary), and using libraries like GraphRAG to create chunks while keeping the same Azure AI Search retriever.

Different chunking and embedding strategies. Decoupling embeddings from raw text chunks - utilizing summaries of content to then link the corresponding documents.

Generating synthetic queries from raw text chunks using LLM prompt for each document in Azure AI search. Use this labeled data to see the match with the incoming query. This will serve as a good <query, passage> synthetic dataset.
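The multi-query experiment listed above boils down to running several rephrasings of a query and taking the union of what comes back. Here is a minimal sketch, where the retrieve callable stands in for whatever calls the Azure AI Search index; the fake retriever, its canned results, and the rephrased queries are all hypothetical.

```python
from typing import Callable, Iterable, List


def multi_query_union(queries: Iterable[str],
                      retrieve: Callable[[str], List[str]]) -> List[str]:
    """Run every query variant and merge the document ids without duplicates.

    Order of first appearance is preserved, so earlier variants win ties.
    """
    seen = set()
    merged = []
    for query in queries:
        for doc_id in retrieve(query):
            if doc_id not in seen:
                seen.add(doc_id)
                merged.append(doc_id)
    return merged


# Stand-in retriever with canned results, for illustration only.
fake_results = {
    "communication exclusion": ["form-12", "form-7"],
    "exclusions for communication equipment": ["form-7", "form-31"],
}
variants = list(fake_results)
print(multi_query_union(variants, lambda q: fake_results.get(q, [])))
```

Comparing the size and quality of this union against the single-query result set is one way to measure whether the extra rewriting calls are worth their cost and latency.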

References: